If you have connected a second monitor or TV to your laptop or computer via HDMI, DisplayPort, VGA or DVI, everything usually works right away without any additional configuration (apart from choosing how the two displays should be used). However, sometimes Windows does not see the second monitor, and it is not always clear why this happens or how to fix it.

This guide explains why the system may not see a second monitor, TV or other display, and what you can do to fix the problem. It is assumed below that both monitors are known to be working.

Checking the connection and basic parameters of the second display

If you cannot get an image on the second monitor, I recommend going through these simple steps before moving on to more complex solutions (you have most likely tried them already, but here they are as a reminder for novice users):

- Double-check that all cables are securely connected at both the monitor and the video card, and that the monitor is turned on, even if you are sure everything is fine.

- If you have Windows 10, open the display settings (right-click the desktop, then “Display settings”) and in the “Display” section, under “Multiple displays”, click “Detect”. This may help Windows “see” the second monitor.

- If you have Windows 7 or 8, open the screen resolution settings (right-click the desktop, then “Screen resolution”) and click “Detect”; Windows may be able to detect the second monitor.

- If both monitors appear in the settings from the previous two steps but only one shows an image, make sure that “Multiple displays” is not set to “Show only on 1” or “Show only on 2”.

- If you have a desktop PC and one monitor is connected to the discrete video card (the outputs on the separate graphics card) while the other is connected to the integrated graphics (the outputs on the rear panel that come from the motherboard), try connecting both monitors to the discrete video card if possible.

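- If you have Windows 10 or 8 and you connected the second monitor by shutting down, plugging in the monitor and turning the computer back on, without restarting, try a restart: it may help. With Fast Startup enabled, “Shut down” does not fully reinitialize the system, while “Restart” does.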

- Open Device Manager, expand “Monitors” and check whether one or two monitors are listed. If there are two but one shows an error, try uninstalling it and then select “Scan for hardware changes” from the “Action” menu.

If you have checked all of these points and found no problems, try the additional options below.

Note: if you use adapters, converters, docking stations, or a very cheap, recently purchased cable to connect the second monitor, any of them can also cause the problem (a little more about this and some other nuances in the last section of the article). If possible, try other ways of connecting and see whether the second monitor becomes available.

Power check

First of all, start with the simplest things. However absurd it may seem, sometimes the cause is so obvious that it does not occur to anyone. So if the computer does not see the monitor, check whether the cable has come loose and whether the monitor’s power button is pressed; the power indicator light should be on.

If the monitor is working (or at least properly connected) but shows no image, try pressing its menu button, which opens the monitor’s on-screen settings menu. If the menu appears, the monitor itself is powered and working.

Video card drivers

Unfortunately, a very common situation among novice users is this: they try to update the driver in Device Manager, get a message that the best driver is already installed, and conclude that the driver really is up to date.

In fact, this message only means that Windows has no other drivers available. You may well be told that the best driver is installed while Device Manager shows “Standard VGA Graphics Adapter” or “Microsoft Basic Display Adapter”. Both names mean that no proper driver was found and a generic driver was installed instead, which supports only basic functions and usually does not work with multiple monitors.
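If you want to check this without scrolling through Device Manager, the adapter names can also be listed in PowerShell with `Get-CimInstance Win32_VideoController | Select-Object Name`. The short Python sketch below is a hypothetical helper (`uses_generic_driver` is not part of Windows or any library): it assumes you have copied those names into a list yourself and simply compares them against the two generic adapter names mentioned above.

```python
# Names Windows shows when no vendor display driver is installed
# ("Standard VGA Graphics Adapter" on Windows 7,
#  "Microsoft Basic Display Adapter" on Windows 8/10).
GENERIC_ADAPTER_NAMES = {
    "standard vga graphics adapter",
    "microsoft basic display adapter",
}


def uses_generic_driver(adapter_names):
    """Return the adapter names that indicate a generic fallback driver.

    adapter_names: iterable of strings, e.g. the names copied from
    Device Manager or from Get-CimInstance Win32_VideoController.
    """
    flagged = []
    for name in adapter_names:
        # Compare case-insensitively and ignore surrounding whitespace.
        if name.strip().lower() in GENERIC_ADAPTER_NAMES:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    adapters = ["Intel(R) UHD Graphics 620", "Microsoft Basic Display Adapter"]
    generic = uses_generic_driver(adapters)
    if generic:
        print("Install the vendor driver for:", ", ".join(generic))
    else:
        print("No generic display drivers found.")
```

If the list comes back non-empty, that adapter is running without its manufacturer’s driver, and installing the driver manually is the next step.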

Therefore, if you have problems connecting a second monitor, I strongly recommend installing the video card driver manually:

- Download the driver for your video card from the official NVIDIA website (or from the website of your video card’s manufacturer) and install it.

Another driver-related scenario is also possible: the second monitor worked, but then suddenly stopped being detected. This may mean that Windows has updated the video card driver. Open Device Manager, open the properties of your video card, and on the “Driver” tab click “Roll Back Driver”.

No sound or picture

Alternatively, disconnect all additional devices from your TV (set-top boxes, USB flash drives, audio system) and then reconnect the computer. You can also try updating the drivers for your video card. I also have a separate article on solving this problem; it deals with an HDMI connection, but that makes no difference, so try those tips too: they help many people.

Many users connect their TV to their PC, and while this usually works, many have reported that Windows 10 does not recognize their TV. This can be an annoying problem, but it can usually be fixed.

Additional information that may help when the second monitor is not detected

In conclusion, some additional nuances that may help figure out why the second monitor is not visible in Windows:

- If one monitor is connected to a discrete video card and the other to the integrated graphics, check whether both video adapters are visible in Device Manager. Sometimes the BIOS disables the integrated video adapter when a discrete one is installed (it can be re-enabled in the BIOS).

- Check whether the second monitor is visible in the video card’s own control panel (for example, the “NVIDIA Control Panel”).

If your situation differs from all the options above and your computer or laptop still does not see the monitor, describe in the comments exactly what happens, which video card the displays are connected to, and any other details of the problem; perhaps I can help.

Today we will connect an external monitor to a laptop, netbook or ultrabook and configure it in Windows 10 and Windows 7. If you have Windows 8, everything will work too; I just don’t have that system installed on my laptop, so I can’t show every step or take screenshots. In general, the Windows version makes little difference when connecting a laptop to a monitor. In most cases, it is enough to simply connect the monitor to the laptop with an HDMI, VGA, DVI or USB Type-C cable. We will also look at which connection interface is best to use and which cable (or adapter) you need.

The computer does not see the second monitor - how to fix it

The reason your computer refuses to work with the second monitor may be either a software or a hardware problem, so you will have to try the tips one at a time. We recommend starting with the simplest ones, which will tell you whether the system detects the second monitor at all. It often happens that Windows sees the second monitor but it is disabled in the settings; in that case, enabling it is as simple as changing a few display settings.

Which interface and cable should I use to connect my laptop to the monitor?

At this stage it is very difficult to give specific, universal recommendations, because everyone has different laptops and monitors. So we will look at the most popular connection options; one of them should suit you.

The best and most common interface for connecting a monitor to a laptop is HDMI. It is available on almost every laptop and monitor, even if your devices are not the newest. If your laptop has an HDMI output and your monitor has an HDMI input, use that.

First, look at your laptop. I’ll show everything using two of my laptops as examples. The newer, budget Lenovo has only an HDMI output. As I already wrote, this is the best option.

The second, older ASUS laptop has the same digital HDMI output plus the now outdated VGA output.

What other options might there be?

- Older laptops may only have a VGA output.

- It's rare, but sometimes laptops have a DVI output.

- Modern gaming laptops may have a Mini DisplayPort in addition to HDMI.

- New ultrabooks (mostly expensive models) have no dedicated output for an external monitor. Instead, the USB Type-C port is used for this. Since very few monitors have a USB Type-C input yet, you will most likely need an adapter, for example USB-C to HDMI.

That covers the laptop. Now look at your monitor: which connection interfaces does it have? My monitor has two HDMI inputs and one VGA (D-Sub).

Since my laptop has an HDMI output, and my monitor has an HDMI input, of course I will use this interface to connect.

But, as you understand, this is not always the case. Let’s consider these options:

- The monitor has no HDMI input, only, for example, DVI and VGA. In this case, you can connect via VGA (if the laptop has a VGA output). But if your laptop has HDMI, it is best to use an HDMI to DVI adapter.

- The laptop has only HDMI, but the monitor has only VGA. Here you can also use an adapter; there are plenty of them on the market. But since VGA is an analog interface, the picture quality may not be very good.

Simply put, when the two devices have no interface in common, you need an adapter. And if possible, it is better not to use the outdated VGA interface for the connection.
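The rules above (a shared digital interface first, then an adapter between digital interfaces, and VGA only as a last resort) can be summarized as a small Python sketch. The function name `choose_connection`, the port labels and the exact preference order are assumptions for illustration only; Mini DisplayPort is simply treated as “DisplayPort” here.

```python
# Digital interfaces in an assumed order of preference (HDMI first, as
# recommended above). VGA is analog and is only used as a last resort.
DIGITAL = ["HDMI", "DisplayPort", "USB-C", "DVI"]


def choose_connection(laptop_outputs, monitor_inputs):
    """Suggest how to connect a laptop to a monitor.

    Returns (laptop_port, monitor_port, needs_adapter),
    or None if no known connection is possible.
    """
    laptop = set(laptop_outputs)
    monitor = set(monitor_inputs)

    # 1. A direct cable over a digital interface both devices share.
    for port in DIGITAL:
        if port in laptop and port in monitor:
            return (port, port, False)

    # 2. An adapter between two different digital interfaces
    #    (for example, HDMI on the laptop to DVI on the monitor).
    for out in DIGITAL:
        for inp in DIGITAL:
            if out in laptop and inp in monitor:
                return (out, inp, True)

    # 3. A direct VGA cable if both sides have VGA...
    if "VGA" in laptop and "VGA" in monitor:
        return ("VGA", "VGA", False)

    # ...or a digital-to-VGA adapter, accepting lower picture quality.
    for out in DIGITAL:
        if out in laptop and "VGA" in monitor:
            return (out, "VGA", True)

    return None
```

For example, `choose_connection(["HDMI", "VGA"], ["DVI", "VGA"])` suggests an HDMI to DVI adapter rather than a VGA cable, which matches the first case in the list above.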

I think we've sorted out the connection interface. Depending on the selected interface, we will need a cable or adapter. I have a regular HDMI cable.

It is very common: you can buy one in almost any electronics store, in various lengths. Just say that you need a cable to connect a computer to a monitor. I have been using the same cable for several years now.

Resolution

Quite often, such problems occur when the selected screen resolution does not match the capabilities of the monitor. In this case, the screen will not work at all or will keep turning off. To fix this, restart the computer in Safe Mode and then set a lower resolution.

However, it is not quite that simple. Modern versions of Windows revert to the previous resolution after 15 seconds unless you confirm the change, so you need to click “Keep changes” in time. In addition, the system may keep switching the resolution whenever it detects settings it considers more suitable.

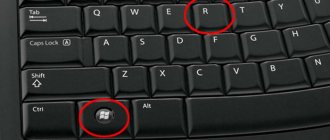
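Choosing the lower resolution boils down to a simple rule: keep the requested mode if the monitor supports it, otherwise pick the largest supported mode that does not exceed it in either dimension. The Python sketch below only illustrates that rule; it does not change any Windows setting. The function name `pick_safe_resolution` is hypothetical, and the list of supported modes is assumed to come from the monitor’s specifications or from the list Windows offers.

```python
def pick_safe_resolution(requested, supported):
    """Return a resolution the monitor can actually display.

    requested: (width, height) the user wants.
    supported: iterable of (width, height) modes the monitor supports.
    """
    modes = set(supported)
    if not modes:
        raise ValueError("monitor reported no supported modes")

    # The requested mode is fine if the monitor supports it.
    if requested in modes:
        return requested

    # Otherwise take the largest supported mode that fits inside it.
    req_w, req_h = requested
    fitting = [m for m in modes if m[0] <= req_w and m[1] <= req_h]
    if fitting:
        return max(fitting, key=lambda m: (m[0] * m[1], m))

    # Nothing fits: fall back to the smallest supported mode.
    return min(modes, key=lambda m: (m[0] * m[1], m))
```

For a monitor that supports 1920x1080, 1600x900, 1280x720 and 1024x768, a request for 2560x1440 falls back to 1920x1080.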

Another situation is also possible: the monitor shows no signs of life while the computer is running. One possible cause is that two monitors were connected and one of them was later disconnected, but the operating system did not register this. In this case, hold down the Fn key on the keyboard (usually located to the left of the spacebar) and press F1, F12 or another display-switching key (depending on the laptop model).

Connect the monitor to the laptop via HDMI cable

It is recommended to turn off both devices before connecting the cable. Honestly, I don’t turn them off, and nothing has burned out yet.