Data transmission is the process of transferring data as signals over a channel, from one point to another or from one point to several points, by means of telecommunications. Dictionaries date the scholarly borrowing of the Latin word datum, meaning “(a thing) given”, to the 1640s. Philosophy links the concepts of information, knowledge, data and freedom, and illustrates the links with examples. The height of a mountain is, first of all, data: it is measured with an altimeter and entered into databases. Once given a specific form, this information ends up in the guidebook the climber studies. An experienced mountaineer works out the best route to the summit, and understanding how the ascent unfolds is already knowledge.

Freedom of choice follows immediately: the climber is free to decide but must accept responsibility for the decision. Some groups have never returned.

Types of data

Historically, information has been presented in many ways. Let us leave the hieroglyphs of the papyri to historians and examine modern techniques. The development of electricity had the greatest impact. Had people learned to transmit thoughts directly, the symbols would have turned out differently...

Analog signal

The first attempts to measure analog quantities were the experiments of Volta, who measured voltage and current. Georg Ohm later quantified the resistance of a conductor. In each case, analog values were used. Representing an object's characteristics as current and voltage gave a powerful impetus to the development of the modern world: a color cathode-ray picture tube, which sets the brightness of its three-color pixels with analog signals, displays a fairly clear picture.

The Second World War revealed the reasons for moving away from analog signals. The Green Hornet system was able to encrypt information perfectly. Its 6-level signal can hardly be called digital, but the shift toward digital is clear. Historically, the first attempt to transmit a binary code was Schilling's 1832 telegraph experiments. Seeking to reduce the number of wires connecting subscribers, the diplomat recalled the binary numbering methods proposed by priests. However, it took humanity more than a century and a half to introduce digital transmission.

Binary digital code

Binary numbering is well known. An analog value is represented as a discrete number and then encoded. The resulting sequence of zeros and ones is usually divided into 8-bit words (bytes). For example, the first Windows operating systems were 16-bit, while floating-point numbers were processed with more bits. The specialized processors of graphics cards use even longer words. The specifics of each system determine how information is represented.
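The digitize-then-split idea can be sketched in a few lines of Python. This is a minimal illustration, not a standard codec: the helper names `encode_sample` and `split_into_words`, the 0 to 5 V input range, the 12-bit resolution and zero-padding of the last word are all assumptions made for the example.

```python
def encode_sample(value: float, v_min: float, v_max: float, bits: int) -> str:
    """Quantize an analog value to an unsigned n-bit code and return it as a bit string."""
    levels = 2 ** bits
    clamped = min(max(value, v_min), v_max)          # keep the input inside the range
    code = round((clamped - v_min) / (v_max - v_min) * (levels - 1))
    return format(code, f"0{bits}b")                 # zero-padded binary representation


def split_into_words(bit_string: str, word_size: int = 8) -> list[str]:
    """Cut a bit string into fixed-size words, padding the last word with zeros."""
    padded = bit_string + "0" * (-len(bit_string) % word_size)
    return [padded[i:i + word_size] for i in range(0, len(padded), word_size)]


bits = encode_sample(3.3, 0.0, 5.0, 12)              # a 3.3 V reading, 12-bit resolution
print(bits, split_into_words(bits))                  # 101010001111 ['10101000', '11110000']
```

A 12-bit code does not fill a whole number of bytes, which is why the sketch pads the second word; real systems may instead pack several samples back to back.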

Data transfer allows humanity to move forward faster. People have different abilities, and the best collector or custodian of information is not necessarily the one who can best put it to use (for themselves, the city, the planet...). It makes more sense to pass it on. The modern world is called the era of the digital revolution. Historically, binary data turned out to be easier to transmit, and it brings a set of specific capabilities:

- Error correction.

- Encryption.

- Simplification of physical lines.

- More efficient use of spectrum, lower transmitter power and lower power flux density.

- Error detection (EDC, 1951).

- Exact copying and playback.
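Error correction, the first item in the list, can be illustrated with the classic Hamming(7,4) code. The text above does not name a specific code, so this is simply a well-known textbook example chosen for illustration: three parity bits protect four data bits, and the parity "syndrome" points directly at a single flipped bit.

```python
def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits as 7 bits laid out as positions 1..7: p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4          # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4          # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4          # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword: list[int]) -> tuple[list[int], int]:
    """Fix up to one flipped bit; return (data bits, 1-based error position or 0)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s3   # the syndrome spells out the faulty position
    if error_pos:
        c[error_pos - 1] ^= 1          # flip it back
    return [c[2], c[4], c[5], c[6]], error_pos


data = [1, 0, 1, 1]
received = hamming74_encode(data)
received[4] ^= 1                       # simulate noise flipping bit position 5
decoded, pos = hamming74_decode(received)
print(decoded == data, pos)            # True 5
```

Detection alone (the EDC item) would need only the parity checks; correction additionally uses their pattern to locate the error.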

The second half of the 20th century produced hundreds of techniques for digitizing analog objects. The main feature of a binary signal is discreteness, so a code cannot convey an analog value exactly. However, the sampling step has become so small that the error can be neglected. Full HD images are a striking example: high screen resolution conveys the fine nuances of an object much better. At some point, the resolution of digital technology surpasses the physiological capabilities of human vision.
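Why the error becomes negligible can be checked numerically. The sketch below assumes a uniform quantizer over a 1 V range (both assumptions made for illustration): the rounding error never exceeds half a quantization step, and each extra bit halves that step.

```python
def quantize(value: float, full_scale: float, bits: int) -> float:
    """Round a value in [0, full_scale] to the nearest of 2**bits evenly spaced levels."""
    step = full_scale / (2 ** bits - 1)
    return round(value / step) * step


def worst_case_error(full_scale: float, bits: int) -> float:
    """A uniform quantizer never errs by more than half a step."""
    return full_scale / (2 ** bits - 1) / 2


# Measure the actual error on a fine grid of inputs between 0 and 1 V
grid = [i / 10_000 for i in range(10_001)]
for bits in (3, 8, 16):
    measured = max(abs(v - quantize(v, 1.0, bits)) for v in grid)
    print(f"{bits:2d} bits: measured {measured:.2e} V, bound {worst_case_error(1.0, bits):.2e} V")
```

At 16 bits the bound is already below 10 microvolts per volt of range, which is the sense in which the sampling error "is neglected".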

Recommendations for choosing a solution

Returning to the general diagram of the corporate network (Fig. 1), we can see that different subsystems can be joined by different communication channels, each chosen as the most suitable. The connection method should be selected based on the requirements that the company's applications place on the data network. The most typical solutions for each type of connection are shown in Fig. 6.

Fig. 6. Typical methods for combining separate office networks into a single corporate data network (KSPD)

To join main and backup data centers, use dedicated fiber-optic lines: they can carry not only IP or Ethernet traffic but also interaction between storage systems, for example via the Fibre Channel protocol. To connect large offices to each other and to a data center within one city, it makes sense to use your own or leased fiber-optic lines, or high-speed channels (100 Mbit/s and higher) offered by local telecom operators (usually based on the provider's Metro Ethernet network).

To connect geographically remote offices, you can use L3 VPN services; if these are unavailable, consider connecting via a Frame Relay or ATM network (although these are offered less often today than L3 VPN).

Mobile users connect through a secure VPN channel over the Internet. The same type of connection suits small offices that transfer only small amounts of data that are not sensitive to delays and packet loss. Today, dedicated digital channels are used either because of specific application requirements or because the communication services described above are unavailable in a given region.
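The recommendations above can be condensed into a rough decision helper. Everything here is hypothetical: the `Site` fields, the order of the checks and the returned labels are assumptions made for illustration, and a real choice would also weigh cost, SLAs and what operators actually offer at each location.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """Hypothetical description of a site to be connected."""
    kind: str                       # "data_center", "office" or "mobile"
    same_city: bool = False         # in the same city as the data center
    large: bool = False             # large office with heavy traffic
    delay_sensitive: bool = False   # applications sensitive to delay and packet loss
    l3vpn_available: bool = True    # an operator offers L3 VPN in the region


def choose_connection(site: Site) -> str:
    """Map a site onto the typical solution described in the text (simplified)."""
    if site.kind == "data_center":
        return "dedicated fiber: IP/Ethernet plus Fibre Channel between storage systems"
    if site.kind == "mobile":
        return "VPN over the Internet"
    if site.same_city and site.large:
        return "own or leased fiber, or operator Metro Ethernet (100 Mbit/s and up)"
    if not site.large and not site.delay_sensitive:
        return "VPN over the Internet"           # small office, non-critical traffic
    if site.l3vpn_available:
        return "L3 VPN service"
    return "Frame Relay or ATM network (or a dedicated digital channel)"


print(choose_connection(Site("office", delay_sensitive=True)))   # L3 VPN service
```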

Etymology

English speakers usually use the plural form, data. We ask Slavophiles to refrain from reproach: modern science was developed by Europe, the heir of the Roman Empire. We will leave aside the question of the deliberate destruction of national history; that debate belongs to historians. Some experts trace the word to the ancient Indian dati ("gift"). Dahl's dictionary defines data as indisputable, obvious, known facts of any kind.

Interestingly, literary English (for example, The New York Times) does not fix a grammatical number for data: it is used as plural or singular as needed. Textbooks often draw a strict distinction, with datum as the singular. The use of the article is a separate issue that will not be discussed here. Experts tend to treat the noun as a mass noun.

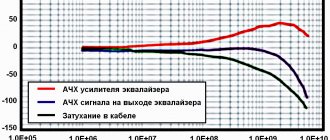

What do the spectra of analog and discrete signals look like?

The two signals can be represented as two functions of time. The figure shows how a continuous signal differs from a discrete one: the source voltage changes smoothly, while the processed voltage changes in steps. At regular intervals, the stepped discrete signal coincides with the continuous one.

Discrete changes occur abruptly, after fixed time intervals. In a digital system, the level is encoded, and every voltage value is described in binary code. The smoothness of the conversion and the fidelity of the transmitted data depend on the measurement frequency. The more precisely the signal level is described and the more often it is measured and processed, the more closely the spectra of the original and transmitted signals match.
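The effect of measurement frequency can be shown with a sample-and-hold model, which produces exactly the stepped waveform described above. The 10 Hz test tone, the three sampling rates and the fine time grid are illustrative assumptions; the point is only that the gap between the stepped copy and the original shrinks as sampling becomes more frequent.

```python
import math


def max_hold_error(signal_hz: float, sample_rate_hz: float,
                   duration_s: float = 0.2, fine_steps: int = 50_000) -> float:
    """Largest gap between a sine wave and its stepped (sample-and-hold) copy."""
    period = 1.0 / sample_rate_hz
    worst = 0.0
    for i in range(fine_steps):
        t = duration_s * i / fine_steps
        held_t = math.floor(t / period) * period           # time of the most recent sample
        original = math.sin(2 * math.pi * signal_hz * t)
        stepped = math.sin(2 * math.pi * signal_hz * held_t)
        worst = max(worst, abs(original - stepped))
    return worst


for rate in (100, 1_000, 10_000):
    print(f"{rate:>6} samples/s: max deviation {max_hold_error(10.0, rate):.4f}")
```

Precision of the level (bit depth) limits the error in the vertical direction; the sampling rate limits it in time. Both have to be adequate for the copy to match the original.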

The idea of openness

The idea of free access to information was put forward by Robert King Merton, one of the founders of the sociology of science, who witnessed the Second World War. Since 1946, the idea has also covered the transfer and storage of computer information, and in 1954 processing was added. In December 2007, people interested in the problem gathered in Sebastopol, California, and formulated a concept linking open-source software, the Internet and the potential of mass access. President Obama later signed the Memorandum on Transparency and Open Government.

Robert King Merton

Humanity's growing awareness of the real potential of civilization is accompanied by calls to solve problems jointly. The concept of open data is discussed at length in a 1995 document by an American science agency. The text covers geophysics and ecology. A well-known example is the DuPont Corporation, which used controversial Teflon production technologies.

Analog signal

This natural type of signal surrounds us everywhere and all the time: sound, images, touch, smell, taste and brain commands. All signals that arise in the Universe without human participation are analog.

In electronics, electrical engineering and communication systems, analog data transmission has been used since electricity was first put to practical use. Its characteristic feature is the continuous, smooth variation of parameters. Graphically, an analog communication session can be drawn as a continuous curve showing the voltage at each moment in time. The curve changes smoothly and breaks only when the connection is lost. In nature and electronics, analog data is generated and distributed continuously; the absence of a continuous signal means silence or a black screen.

In continuous communication systems, sound, images and any other data are represented by their analogue: electrical or electromagnetic signals. For example, the volume and timbre of a voice are carried from a microphone to a speaker by an electrical signal: volume depends on the signal's amplitude, and timbre on its frequency content. Thus, in voice communication, voltage first becomes an analogue of sound, and then sound becomes an analogue of voltage again. Any data is transmitted the same way in analog communication systems.

Terms

The term data transmission most often refers to digital information, including digitized analog signals. Science takes a broader view: data is any qualitative or quantitative description of an object. A classic example is the information anthropologists have compiled about the planet's rare peoples. Organizations also collect information widely: on sales, crime, unemployment and literacy.

Information transmission is the transfer of a digital bit stream.

Metadata is a higher level of data that describes other data.

Data is measured, collected, transmitted, analyzed, and presented as graphs, tables, images and numbers. Programmers are familiar with raw files that lack formatting; a hard disk partition whose file system cannot be recognized is labeled RAW. Formatting simplifies the transmission and perception of information, and design is about presenting it visually and logically. Sometimes information is encoded to protect it and to allow damaged areas to be restored.

Format is a way of presenting information.

A protocol is a set of interface conventions that define the order in which information is exchanged.

Channels (methods)

As information propagates, it passes through a medium:

- Copper cable: RS-232 (1969), FireWire (1995), USB (1996).

- Optical fiber.

- Broadcast (wireless transmission).

- Computer buses.

Each medium imposes its own features. Few people know that the energy of an electric current is also carried by an electromagnetic wave. Air conducts electricity far worse than a wire, which imposes its own specifics; the difference can be offset by ionization, a phenomenon familiar to welders. The processes accompanying the propagation of an electromagnetic wave lack a deeper explanation: physicists simply state the facts, describing the phenomenon with a body of data.

For a long time, light, heat, electricity and magnetism were considered unrelated phenomena. It is important to understand that the set of media is a product of technological evolution, and other methods of data transfer will surely be discovered. Implementations of the media differ, and the set of standards is determined by their specifics. Local connections often use Wi-Fi, based on the IEEE 802.11 link-layer protocol. Cellular operators use completely different ones, such as GSM and LTE. Mobile networks are also actively adopting IP, closing the circle and unifying the way digital equipment is used.

Why so many protocols? Wi-Fi, as implemented, cannot cover significant distances: transmitter power is limited, and packet structures differ. Bluetooth limits its basic capabilities to short-range tasks such as transferring a couple of files from a computer to a phone.

Formatting

Physicists quickly realized that a medium transmits information poorly in its direct form. A copper wire can carry speech, but the ether quickly kills low-frequency oscillations. Popov was the first to think of modulating a carrier with useful information, in his case Morse code. The idea is to vary the amplitude of the radio wave in accordance with the message so that the receiving party can extract and reproduce it.
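Amplitude modulation as just described can be sketched as follows. This is a generic textbook AM model, not a reconstruction of Popov's equipment; the 1 kHz carrier, 20 kHz sample rate, 5 Hz "message" and modulation index of 0.5 are assumptions. The crude peak detector shows how the receiver recovers the message from the carrier's envelope.

```python
import math


def am_modulate(message, carrier_hz, fs_hz, index=0.5):
    """Classic AM: carrier amplitude = 1 + index * m(t), with m(t) kept within [-1, 1]."""
    return [(1.0 + index * m) * math.cos(2 * math.pi * carrier_hz * n / fs_hz)
            for n, m in enumerate(message)]


def envelope(signal, samples_per_cycle):
    """Crude envelope detector: the peak of |s| over each carrier period."""
    return [max(abs(x) for x in signal[i:i + samples_per_cycle])
            for i in range(0, len(signal), samples_per_cycle)]


fs, fc = 20_000, 1_000                                          # sample rate and carrier, Hz
msg = [math.sin(2 * math.pi * 5 * n / fs) for n in range(fs)]   # 1 s of a slow 5 Hz message
received = envelope(am_modulate(msg, fc, fs), fs // fc)
# received[k] follows 1 + 0.5 * msg at the start of each carrier period
```

Keeping `index` at or below 1 ensures the envelope never crosses zero, which is what lets such a simple detector work.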

The growth of broadcasting created the need for better techniques of impressing useful information on the carrier wave. In the late 1920s, Armstrong proposed varying the frequency slightly in accordance with the message. The new type of modulation improved sound quality while resisting interference well, and music lovers immediately appreciated it.
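A minimal frequency-modulation sketch, assuming a 1 kHz carrier, 200 Hz peak deviation and a 48 kHz sample rate (all chosen for illustration). The message shifts the instantaneous frequency while the amplitude stays constant; that the information does not ride on the amplitude is one reason FM tolerates amplitude noise well. Zero-crossing counting stands in for a real FM discriminator.

```python
import math


def fm_modulate(message, carrier_hz, deviation_hz, fs_hz):
    """FM: instantaneous frequency = carrier + deviation * m(t); amplitude stays at 1."""
    phase, out = 0.0, []
    for m in message:
        inst_freq = carrier_hz + deviation_hz * m
        phase += 2 * math.pi * inst_freq / fs_hz     # integrate frequency to obtain phase
        out.append(math.cos(phase))
    return out


def estimate_freq(signal, fs_hz):
    """Rough frequency estimate from the number of upward zero crossings."""
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if a < 0 <= b)
    return crossings * fs_hz / len(signal)


fs = 48_000
tone_up = fm_modulate([1.0] * fs, 1_000, 200, fs)     # message held at +1 for one second
tone_down = fm_modulate([-1.0] * fs, 1_000, 200, fs)  # message held at -1
print(estimate_freq(tone_up, fs), estimate_freq(tone_down, fs))   # close to 1200 and 800
```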

The Green Hornet military system used discrete frequency-shift keying: an instantaneous change of frequency in accordance with the transmitted message. The warring parties appreciated the benefits of such communication, but its deployment was hampered by the enormous size of the equipment (each terminal weighed dozens of tons). The invention of the transistor changed the situation, and data transmission began to go digital.
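Binary frequency-shift keying can be sketched generically; this is not a model of the Green Hornet equipment. The tones of 1200 Hz for a 0 and 2200 Hz for a 1, the 48 kHz sample rate and 10 ms per bit are arbitrary assumptions, picked so that each bit slot holds a whole number of cycles of both tones. The receiver simply compares how much energy each bit slot has at the two frequencies.

```python
import math


def fsk_modulate(bits, f0_hz, f1_hz, fs_hz, samples_per_bit):
    """Send f0 for a 0 bit and f1 for a 1 bit, switching instantly with continuous phase."""
    phase, out = 0.0, []
    for bit in bits:
        freq = f1_hz if bit else f0_hz
        for _ in range(samples_per_bit):
            phase += 2 * math.pi * freq / fs_hz
            out.append(math.sin(phase))
    return out


def fsk_demodulate(signal, f0_hz, f1_hz, fs_hz, samples_per_bit):
    """Non-coherent detector: pick the tone with more energy in each bit slot."""
    bits = []
    for start in range(0, len(signal), samples_per_bit):
        chunk = signal[start:start + samples_per_bit]
        energies = []
        for f in (f0_hz, f1_hz):
            i = sum(x * math.cos(2 * math.pi * f * n / fs_hz) for n, x in enumerate(chunk))
            q = sum(x * math.sin(2 * math.pi * f * n / fs_hz) for n, x in enumerate(chunk))
            energies.append(i * i + q * q)              # phase-independent energy at f
        bits.append(1 if energies[1] > energies[0] else 0)
    return bits


message = [1, 0, 1, 1, 0, 0, 1, 0]
wave = fsk_modulate(message, 1_200, 2_200, 48_000, 480)          # 480 samples = 10 ms per bit
print(fsk_demodulate(wave, 1_200, 2_200, 48_000, 480) == message)  # True
```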

The foundation of computer networks was laid by the American ARPANET, over which packets began to be transferred between computers. The first digital network protocols then came into use. Today, IP is taking over mobile communications as well, and phones get their own IP addresses.

Access to the Internet

Connecting LANs via the Internet is becoming increasingly widespread as Internet access itself becomes more available.

Today every company office has (or can easily get) its own dedicated Internet connection. An encrypted VPN channel is then set up between the office LANs, and data is transmitted through it (Fig. 5).

In large cities, Internet access is now good enough to carry delay-insensitive data between company offices.

Fig. 5. Organization of a corporate data network (KSPD) using VPN channels over the Internet

The main disadvantage of connecting via the Internet, from a corporate point of view, is the lack of any guarantees regarding bandwidth, delays and packet losses.

The advantages of this connection are the following:

- high availability of Internet connection services and, as a result, fast channel deployment time;

- low connection cost;

- low cost of ownership of such channels (the central office has Internet access anyway, so only the branch offices need to be connected).

As a consequence of these factors, connecting via the Internet can be used in the following cases:

- mobile users who do not have the need or ability to use other connection methods. As a last resort, it is possible to connect via a VPN channel after connecting to the Internet via a GPRS modem;

- home users or small offices who do not need to constantly transfer large amounts of data;

- as a temporary solution if no channels other than Internet access are available at the site of a new office. While a permanent channel is being set up, the office works with corporate data via the Internet;

- organization of a relatively cheap backup channel, when a VPN channel via the Internet is organized simultaneously with a dedicated communication channel and is used in case of failure of the latter.

When using Internet connections, pay special attention to security, since transmitted data may be intercepted and intruders may penetrate the corporate network via the Internet. Firewalls are therefore mandatory. In some cases, even when offices are connected via the Internet, a corporate data network model with centralized Internet access is used: all Internet traffic of a remote office then passes through a proxy server installed in the central office.

Protocol Layers

Digital data transmission by modem was implemented in 1940. Networks appeared 25 years later.

Increasingly complex communication systems required new methods for describing how computer systems interact. The OSI conceptual model introduces protocol layers, which are abstractions with no physical existence. The model was created by engineers of the International Organization for Standardization (ISO) and is specified in the ISO/IEC 7498-1 standard. Parallel work was carried out by the CCITT committee (whose acronym is French). In 1983, the two sets of documents were merged into a single model of protocol layers.

The 7-layer concept grew out of the work of Charles Bachman. The OSI model draws on experience from developing ARPANET, EIN, NPLNet and CYCLADES. The resulting layers interact vertically with their neighbors: each layer uses the services of the layer below it.

Important! Each OSI layer corresponds to a set of protocols determined by the system being used.

In computer networks, protocols are divided into the following layers:

- Physical (bits): USB, RS-232, 8P8C.

- Data link (frames): PPP (including PPPoE, PPPoA), IEEE 802.22, Ethernet, DSL, ARP, LP2P. Obsolete: Token Ring, FDDI, ARCNET.

- Network (packets): IP, AppleTalk.

- Transport (datagrams, segments): TCP, UDP, PORTS, SCTP.

- Session: RPC, PAP.

- Presentation: ASCII, JPEG, EBCDIC.

- Application: HTTP, FTP, DHCP, SNMP, RDP, SMTP.
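The vertical interaction between layers can be illustrated with a toy encapsulation model. The bracketed string headers are placeholders, not real protocol formats, and only a subset of the example protocols from the list is included; a real Ethernet frame, for instance, also carries a trailer. On the way down each layer wraps what it receives from above, and the receiver unwraps in the reverse order.

```python
# Layer number -> (name, example protocols taken from the list above)
OSI_LAYERS = {
    7: ("Application", ["HTTP", "FTP", "DHCP", "SNMP", "RDP", "SMTP"]),
    6: ("Presentation", ["ASCII", "JPEG", "EBCDIC"]),
    5: ("Session", ["RPC", "PAP"]),
    4: ("Transport", ["TCP", "UDP", "SCTP"]),
    3: ("Network", ["IP", "AppleTalk"]),
    2: ("Data link", ["Ethernet", "PPP", "ARP"]),
    1: ("Physical", ["USB", "RS-232", "8P8C"]),
}


def encapsulate(payload: str, layers=(4, 3, 2)) -> str:
    """Going down the stack, each layer prepends its own (placeholder) header."""
    unit = payload
    for number in layers:
        unit = f"[{OSI_LAYERS[number][0]} hdr]{unit}"
    return unit


def decapsulate(unit: str, layers=(2, 3, 4)) -> str:
    """Going up the stack, each layer strips the header its peer added."""
    for number in layers:
        header = f"[{OSI_LAYERS[number][0]} hdr]"
        if not unit.startswith(header):
            raise ValueError(f"expected {header} at the front of the unit")
        unit = unit[len(header):]
    return unit


framed = encapsulate("GET /")
print(framed)                 # [Data link hdr][Network hdr][Transport hdr]GET /
print(decapsulate(framed))    # GET /
```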

Physical layer

Why do developers need a hundred standards? Many of the documents appeared gradually, as demands grew. The physical layer is implemented by a set of connectors, wires and interfaces. For example, shielded twisted pair can carry high frequencies, making it possible to implement protocols with a bit rate of 100 Mbit/s. Optical fiber carries light, which widens the usable spectrum further and makes gigabit networks possible.

The physical layer manages digital modulation schemes, physical coding (carrier formation, information storage), forward error correction, synchronization, channel multiplexing, and signal equalization.

Data link (channel) layer

Each port is controlled by its own machine instructions. The data link layer defines how formatted information is transferred over the existing hardware. For example, PPPoE describes how to run the PPP protocol over Ethernet networks, traditionally through an 8P8C port. Through evolutionary struggle, Ethernet suppressed its rivals. Its inventor, Robert Metcalfe, founder of 3Com, convinced several large manufacturers (DEC, Intel, Xerox) to join forces.

Along the way, the channels were improved: coaxial cable → twisted pair → optical fiber. The changes pursued the following goals:

- reduction in price;

- increasing reliability;

- introduction of duplex mode;

- increasing noise immunity;

- galvanic isolation;

- powering devices via network cable.

Optical cable increased the length of the segment between signal regenerators. The data link protocol also describes the structure of the network, including encoding methods, bit rate, number of nodes and operating mode. The layer introduces the concept of a frame, handles MAC addressing, detects errors, requests retransmission and controls the transmission rate.
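Frames and error detection can be sketched with Python's standard `struct` and `zlib` modules. The layout is simplified in the spirit of Ethernet (destination MAC, source MAC, EtherType, payload, 4-byte CRC-32 trailer); `zlib.crc32` uses the same CRC-32 polynomial as Ethernet, but IEEE 802.3 details such as the preamble and minimum-length padding are deliberately left out, and the helper names are hypothetical.

```python
import struct
import zlib


def mac_to_bytes(mac: str) -> bytes:
    """'aa:bb:cc:dd:ee:ff' -> 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def build_frame(dst: str, src: str, ethertype: int, payload: bytes) -> bytes:
    """Header (dst MAC, src MAC, EtherType) + payload + CRC-32 trailer."""
    body = mac_to_bytes(dst) + mac_to_bytes(src) + struct.pack("!H", ethertype) + payload
    return body + struct.pack("<I", zlib.crc32(body))


def frame_is_intact(frame: bytes) -> bool:
    """Receiver side: recompute the CRC over the body and compare with the trailer."""
    body, fcs = frame[:-4], frame[-4:]
    return struct.pack("<I", zlib.crc32(body)) == fcs


frame = build_frame("ff:ff:ff:ff:ff:ff", "02:00:00:00:00:01", 0x0800, b"hello")
corrupted = bytearray(frame)
corrupted[15] ^= 0x01                       # flip one bit in the second payload byte
print(frame_is_intact(frame), frame_is_intact(bytes(corrupted)))   # True False
```

A CRC only detects the damage; recovering the frame is left to retransmission, as the paragraph above notes.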

Network

The generally accepted IP protocol defines the packet structure and introduces an address made of four groups of numbers, familiar to everyone today. Some address ranges are reserved. DNS server databases map names to resource addresses. The configuration of the underlying network is largely irrelevant, since IP imposes only weak restrictions; Ethernet, by contrast, required a unique MAC address. IPv4 limits the number of addresses to about 4.3 billion (2^32). Humanity has managed with this so far.
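Python's standard `ipaddress` module makes the structure of an IPv4 address easy to inspect. The addresses below are arbitrary examples; the private, loopback and global labels come from the module's built-in tables of reserved ranges.

```python
import ipaddress

addr = ipaddress.IPv4Address("192.168.10.25")
print(list(addr.packed))      # the four groups of numbers: [192, 168, 10, 25]
print(int(addr))              # the same address as one 32-bit integer: 3232238105
print(2 ** 32)                # size of the whole IPv4 space: 4294967296 (about 4.3 billion)
print(ipaddress.ip_network("10.0.0.0/8").num_addresses)   # one reserved block: 16777216


def describe(text: str) -> str:
    """Classify an IPv4 address using the module's tables of reserved ranges."""
    ip = ipaddress.IPv4Address(text)
    if ip.is_loopback:
        return "loopback"
    if ip.is_private:
        return "private"
    return "global" if ip.is_global else "other reserved"


for text in ("10.1.2.3", "192.168.10.25", "127.0.0.1", "8.8.8.8"):
    print(text, describe(text))
```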

Network names are usually organized into domains. For technical reasons, there is no one-to-one correspondence between a name and the four groups of numbers. The World Wide Web is traditionally denoted by the abbreviation www. Today, URLs often omit these redundant letters: a person who opens a browser clearly intends to surf the Web from their computer.

Transport

This layer extends the format structure further. TCP splits the data stream into numbered segments, which makes lost data easy to find and guarantees its recovery through retransmission.
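The role of sequence numbers can be sketched in a heavily simplified form. Here the sequence number is just the byte offset (real TCP starts from a random initial value, wraps at 32 bits and adds windows and timers), `mss` borrows the name of TCP's maximum segment size, and the helper functions are hypothetical. The receiver reports the first byte it still lacks, much like a cumulative acknowledgment, so the sender knows exactly what to resend.

```python
import random


def segment(data: bytes, mss: int) -> list[tuple[int, bytes]]:
    """Split a byte stream into (sequence number, payload) pairs; seq = byte offset."""
    return [(offset, data[offset:offset + mss]) for offset in range(0, len(data), mss)]


def reassemble(segments) -> tuple[bytes, int]:
    """Rebuild the contiguous prefix of the stream and return it together with
    the next expected byte offset (what a cumulative acknowledgment reports)."""
    by_seq = {seq: payload for seq, payload in segments}   # duplicates collapse here
    data, expected = bytearray(), 0
    while expected in by_seq and by_seq[expected]:
        payload = by_seq[expected]
        data += payload
        expected += len(payload)
    return bytes(data), expected


message = b"Data transmission over an unreliable network"
segments = segment(message, mss=8)
random.seed(7)
random.shuffle(segments)                  # segments may arrive out of order
lost = segments.pop()                     # and one of them never arrives

partial, ack = reassemble(segments)       # the receiver keeps asking for byte `ack`
full, _ = reassemble(segments + [lost])   # the sender retransmits the missing segment
print(ack == lost[0], full == message)    # True True
```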

Channel in the operator's packet network (Frame Relay, ATM)

Until recently, connecting offices through Frame Relay and ATM carrier networks was the most common approach. In the general case, the connection diagram for a corporate customer is as follows (Fig. 4): each office is connected by one (or several) ports to the operator's data network, and virtual channels connecting the customer's offices are then set up within the operator's network.

Virtual channels are configured programmatically and each has its own guaranteed data transfer rate, and the office just needs to be connected to the operator’s network with one port of the required bandwidth. Software configuration of virtual connections allows you to create new connections between offices and easily change the parameters of existing connections without changing the physical topology of the network.

Compared to a network built on dedicated links, where each dedicated link requires a physical port on each side of the connection, the number of required physical ports is significantly reduced. Due to this, each office can use simpler equipment or make do with fewer devices.
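The port savings are simple arithmetic. This sketch assumes one physical port at each end of every leased line and a single access port per office in the operator-network case; real designs may add redundant ports.

```python
def ports_leased_full_mesh(offices: int) -> int:
    """A leased line between every pair of offices; each line needs a port at both ends."""
    return 2 * (offices * (offices - 1) // 2)


def ports_leased_star(offices: int) -> int:
    """Leased lines from the central office to every branch: n - 1 lines, 2 ports each."""
    return 2 * (offices - 1)


def ports_virtual_channels(offices: int) -> int:
    """One access port per office; the mesh of virtual channels lives in the operator network."""
    return offices


for n in (3, 5, 10):
    print(n, ports_leased_full_mesh(n), ports_leased_star(n), ports_virtual_channels(n))
```

With ten offices, a full mesh of leased lines needs 90 ports, and even a star needs 9 ports at the central office alone, whereas virtual channels give every office logical reach to every other office through one port each.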

The reliability of this type of connection also increases. Since the operator’s network usually already has its own fault-tolerance mechanisms, the customer only needs to provide redundancy for its own access equipment and the “last mile” from that equipment to the operator’s network.

The cost of such a solution for the customer is also usually lower than when using dedicated synchronous/asynchronous channels due to the following factors:

- less equipment needed;

- the cost of each virtual channel is lower than the cost of the corresponding physical channel (due to the use of underutilized bandwidth of some connections by others).

However, such connections are now worth making only if specific applications require them or when new offices join a corporate network that already uses this technology, since networks of this kind are inferior to IP VPN networks in many consumer parameters.

Typical Frame Relay channel speeds are up to 2 Mbit/s, which is often no longer sufficient for modern applications. ATM channels run from 2 to 155 Mbit/s; however, such connections are relatively rare, and an ATM port and channel cost more than IP/MPLS channels of similar speed.
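A quick calculation shows why 2 Mbit/s often falls short. The 1 GB transfer size is an arbitrary example, and `efficiency=1.0` ignores protocol overhead, so real transfers take somewhat longer.

```python
def transfer_time_s(size_bytes: int, rate_bit_s: float, efficiency: float = 1.0) -> float:
    """Time to send size_bytes over a channel; efficiency < 1 models protocol overhead."""
    return size_bytes * 8 / (rate_bit_s * efficiency)


one_gigabyte = 10 ** 9
for name, rate in (("Frame Relay, 2 Mbit/s", 2e6), ("ATM, 155 Mbit/s", 155e6)):
    print(f"{name}: {transfer_time_s(one_gigabyte, rate) / 60:.1f} min per GB")
```

Over an hour per gigabyte at 2 Mbit/s versus under a minute at 155 Mbit/s, before any overhead is counted.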

In terms of security, virtual FR/ATM channels are somewhat inferior to leased lines. One client's Frame Relay network traffic is separated from another client's traffic and cannot enter that client's network. However, this separation is software and can be violated unnoticed by the user, for example due to operator error.

List of books to help you understand analog and digital signals

You can study and compare the principles of data processing and transmission in more detail by reading the following literature:

- Sato Yu. Signal Processing: First Acquaintance. Trans. from Japanese; ed. by Yoshifumi Amemiya. Moscow: Dodeka-XXI, 2002. The book provides basic knowledge of DSP methods and is addressed to radio amateurs, students and schoolchildren just starting to study data transmission systems.

- Introduction to Digital Filtering / ed. R. Bogner and A. Constantinides. Trans. from English. Moscow: Mir, 1977. The book gives a popular, accessible account of various data processing systems, comparing analog and digital systems and describing their pros and cons.

- Fundamentals of Digital Signal Processing: Course of Lectures / A.I. Solonina, D.A. Ulakhovich, S.M. Arbuzov, E.B. Soloviev, I.I. Guk. St. Petersburg: BHV-Petersburg, 2005. Based on a lecture course for students of the Bonch-Bruevich St. Petersburg State University of Telecommunications. The book outlines the theoretical foundations of data processing and describes discrete and digital systems using various conversion methods. Intended for university study and for advanced training of specialists.

- Sergienko A.B. Digital Signal Processing, 2nd ed. St. Petersburg: Piter, 2006. An electronic teaching complex for the discipline "Digital Signal Processing", comprising a course of lectures, a laboratory workshop and guidelines for independent work. Intended for teachers and for self-study by undergraduate students.

- Lyons R. Digital Signal Processing, 2nd ed. Trans. from English. Moscow: Binom-Press, 2006. The book covers DSP in detail, in clear language and with plenty of illustrations; it is one of the simplest and most accessible books on the subject in Russian.

Good old analog communication is quickly losing ground. Despite modernization and improvements, its capacity for data exchange has reached its limit, and the old ailments remain: distortion and noise. Digital communications largely avoid these shortcomings and transmit large amounts of information quickly, efficiently and with very few errors.